Welcome back to this series on building threat hunting tools!

In this series, I will be showcasing a variety of threat hunting tools which you can use to hunt for threats, automate tedious processes, and extend to create your own toolkit! The majority of these tools will be simple with a focus on being easy to understand and implement. This is so that you, the reader, can learn from these tools and begin to develop your own.

There will be no cookie-cutter tutorial on programming fundamentals. Instead, this series will focus on the practical implementation of scripting and programming through small projects. You are encouraged to play with these scripts, figure out ways to break or extend them, and improve on their basic design to fit your needs. I find this the best way to learn any new programming language or concept and, certainly, the best way to derive value!

In this instalment, we will dive into web scraping and learn how to create our own web scraping tool to gather threat intelligence.

What is Web Scraping?

Web scraping is the process of automatically extracting data from websites. This typically involves sending HTTP requests to a website’s server, parsing the HTML or XML content of the response, and then extracting the relevant data using specific patterns or rules. This data is then stored in a structured format such as a database or spreadsheet where it can be analyzed or used.

To perform web scraping in Python we will be using Beautiful Soup. This is a Python library for web scraping that provides a simple and intuitive way to parse HTML and XML documents. It allows developers to extract data from websites by traversing the document tree, searching for specific tags or attributes, and manipulating the data as needed. It is widely used in web scraping projects because it is easy to learn and use, and it can handle various parsing tasks with minimal code.
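As a small illustration of traversing the document tree and searching for tags and attributes, the sketch below parses a hand-written HTML snippet. The HTML, the `advisories` id, and the example.com links are made up for this example, and it assumes the `beautifulsoup4` package is installed.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML, written only for this illustration
SAMPLE_HTML = """
<html>
  <head><title>Example advisories</title></head>
  <body>
    <ul id="advisories">
      <li><a href="https://example.com/a1">Advisory One</a></li>
      <li><a href="https://example.com/a2">Advisory Two</a></li>
    </ul>
  </body>
</html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# soup.title is the <title> tag; .string is the text inside it
page_title = soup.title.string

# find() returns the first matching tag, find_all() returns every match
advisory_list = soup.find("ul", id="advisories")
links = [(a.text.strip(), a.get("href")) for a in advisory_list.find_all("a")]

print(page_title)
for text, href in links:
    print(f"{text}: {href}")
```

The same three operations (read a tag's text, find a tag, read an attribute) are all the scraper in this article needs.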

When performing web scraping it is important to respect the website’s terms of service and avoid overloading the server with requests. Failure to do so can be considered unethical or even illegal in some cases.
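One way to put this into practice is to check a site’s robots.txt rules and pause between requests. The sketch below uses Python’s standard `urllib.robotparser` module. The robots.txt content, the user agent name, and the example.com URLs are all invented so the example runs offline; a real script would load the rules from the target site instead.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, hard-coded so the example runs offline
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "threat-intel-scraper-example"  # made-up name for illustration

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def allowed(url):
    """Return True if the robots.txt rules permit fetching this URL."""
    return robots.can_fetch(USER_AGENT, url)


def polite_delay(default_seconds=1.0):
    """Use the site's Crawl-delay if it declares one, else a default pause."""
    delay = robots.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default_seconds


def crawl(urls, fetch, sleep=time.sleep):
    """Fetch each permitted URL in turn, pausing after every request."""
    results = {}
    for url in urls:
        if not allowed(url):
            continue  # skip anything the site asks crawlers to avoid
        results[url] = fetch(url)
        sleep(polite_delay())
    return results


# Demo with a fake fetch function and a no-op sleep so it finishes instantly
results = crawl(
    ["https://example.com/bulletins/1", "https://example.com/private/admin"],
    fetch=lambda url: f"<html>content of {url}</html>",
    sleep=lambda seconds: None,
)
print(list(results))
```

Passing `fetch` and `sleep` in as parameters is just a convenience for testing; in a real scraper `fetch` could wrap `requests.get` and `sleep` would be left at its default.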

The Problem

To demonstrate web scraping we are going to do something common in the world of threat intelligence: keeping track of high severity vulnerabilities (vulnerabilities with a CVSS base score of 7.0–10.0). These vulnerabilities can often lead to Remote Code Execution (RCE) and give an attacker initial access to a system. As such, it is crucial to stay on top of them and make sure they are passed on to whoever in your organization is in charge of patching.

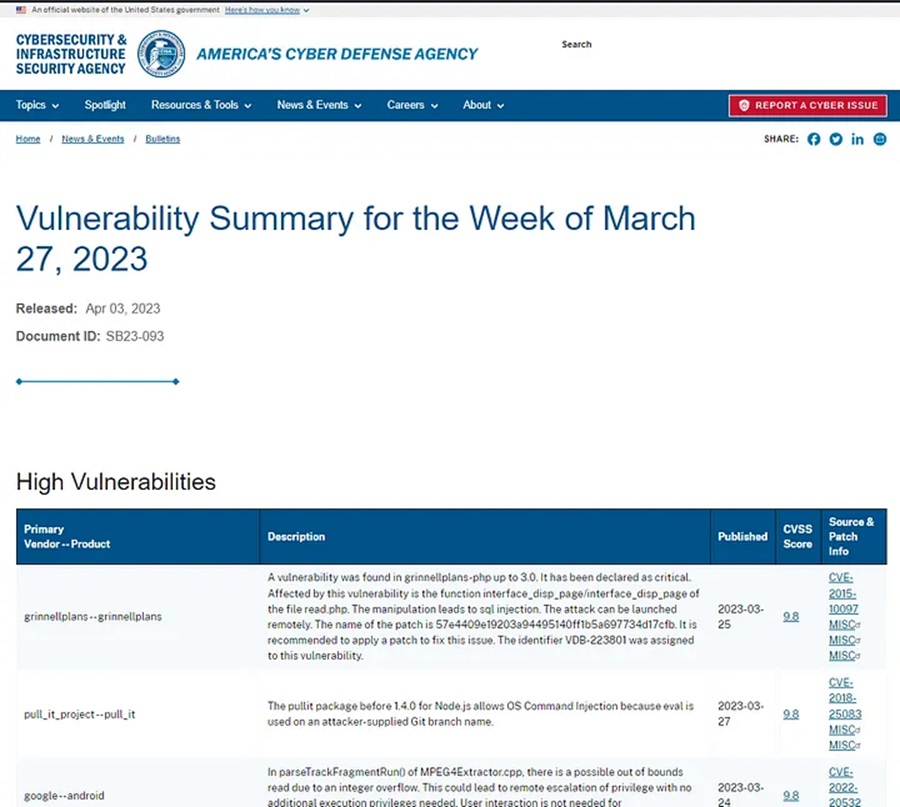

The US-based Cybersecurity and Infrastructure Security Agency (CISA) maintains a weekly summary of vulnerabilities which it posts as a bulletin on its website. As threat intelligence analysts, we could manually go through this weekly bulletin and pick out the high severity vulnerabilities that we need to investigate; however, this would be a tedious and time-consuming process. Instead, we can use a bit of web scraping to extract the relevant details and write them to a CSV file that we can email to our vulnerability patching colleagues. Let’s begin!

The Solution

To start we can look at the web page we will be scraping:

This page divides vulnerabilities into four tables based on severity: High Vulnerabilities, Medium Vulnerabilities, Low Vulnerabilities, and Severity Not Yet Assigned. We will be focusing on the High Vulnerabilities table, which is the first table on the page. To capture this table we first need to make a request to download the page and then parse the returned HTML with Beautiful Soup, using Python’s built-in html.parser.

    # import necessary Python modules
    import csv
    import requests
    from bs4 import BeautifulSoup

    # download the page
    page = requests.get("https://www.cisa.gov/news-events/bulletins/sb23-100")

    # parse the page with Beautiful Soup
    soup = BeautifulSoup(page.content, "html.parser")

Next, let’s set up some variables that we will use for output. These will be PAGE_TITLE (the title of the web page) and FILENAME (the name of the CSV file we will create with the vulnerability information).

    # variables for output
    PAGE_TITLE = soup.title.string
    a = soup.title.string.split("of")
    b = a[1].split("|")
    FILENAME = "CISA vulnerabilities - " + b[0].strip() + ".csv"

Notice how a bit of Python string manipulation is required here just to get the date of the report from the page title.

You can read more on Python’s string methods here.
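Splitting on "of" works as long as those two letters appear only once in the title. As a slightly more defensive alternative, the sketch below pulls the date out with a regular expression. The sample title is an assumption about the page’s title format, inferred from the split logic above, and `report_date` and `csv_filename` are hypothetical helper names.

```python
import re

# Assumed title layout, inferred from the split("of") / split("|") logic
sample_title = "Vulnerability Summary for the Week of April 3, 2023 | CISA"


def report_date(title):
    """Return the text between 'Week of' and the '|' separator (or end of title)."""
    match = re.search(r"Week of\s+(.+?)\s*(?:\||$)", title)
    if match is None:
        raise ValueError(f"unexpected title format: {title!r}")
    return match.group(1)


def csv_filename(title):
    """Build the output filename from the report date in the title."""
    return f"CISA vulnerabilities - {report_date(title)}.csv"


print(csv_filename(sample_title))
```

Raising an error on an unexpected title makes a layout change on the site obvious straight away, rather than producing an oddly named file.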

Let’s move on to capturing the information stored in the High Vulnerabilities table. This is the first table on the page so we can find the first occurrence of the table HTML tag (<table>), then move into the body of this table using the tbody tag (<tbody>), and finally, find all the table rows using the tr tags (<tr>). We will store all these table rows in a list (rows variable).

    # capture high vulnerabilities table
    table = soup.find("table")
    table_body = table.find("tbody")
    rows = table_body.find_all("tr")

Once we have the rows, we need a way to extract the relevant information and store it so we can then transfer it to a CSV file. To solve this, I created a list called vulns to store Python dictionary objects that contain vulnerability information in the form of key:value pairs. The key is the name of the field we want to add to the CSV file and the value is what we want to extract from the HTML table row.

The following for loop iterates through all the rows stored in the rows variable and finds the table data stored in the HTML td tag (<td>). It stores the information contained in the table data tags in the cols variable (columns) using a Python list comprehension.

    # list to hold vulnerability dictionaries
    vulns = []

    # loop through table rows
    for row in rows:
        # create table columns
        cols = [x for x in row.find_all("td")]

With the table columns stored, we can now move on to extracting the data we want from these columns. To do this, we can index into the list of columns (table data tags) and perform some Python text manipulation. For the first column (cols[0]) we extract the text property and split it on the -- character pair. This gives us the vendor name and the product name for the vulnerability.

The other fields are simpler to extract as we just need to get the text property and use the Python string method .strip() to remove surrounding whitespace. For the cve field we need to find the anchor tag (<a>) and extract the text property, whilst for the reference field we need to extract the link at this anchor tag (stored in its href attribute).

        # extract relevant fields
        # (the first column reads "vendor -- product", so the vendor comes first)
        vendor, product = cols[0].text.split("--")
        description = cols[1].text.strip()
        published = cols[2].text.strip()
        cvss = cols[3].text.strip()
        cve = cols[4].find("a").text.strip()
        reference = cols[4].find("a").get("href")

Once we have extracted this data we can create our Python dictionary object (named vuln) and store the data as key:value pairs. Finally, we can append this dictionary to the vulns list.

        # store fields as a dictionary object
        vuln = {
            "product": product.strip(),
            "vendor": vendor.strip(),
            "description": description,
            "published": published,
            "cvss": cvss,
            "cve": cve,
            "reference": reference
        }

        # append dictionary object to vulnerability list
        vulns.append(vuln)

With all the data extracted from the web page, we can now begin writing it to a CSV file for easy data analysis. To do this, we first need to create our CSV file header row that contains the headers for each data column.

    # CSV file header row
    header_row = ["Product", "Vendor", "Description", "Published", "CVSS", "CVE", "Reference"]

Next, we can create a new CSV file, using the FILENAME variable already created, by making use of a Python context manager (the with statement):

    # create a CSV file
    with open(FILENAME, "w", encoding='UTF8', newline='') as f:
        …

Note that this file is opened in write mode (w), uses UTF-8 encoding, and sets newline to an empty string. The last setting lets the csv module control line endings itself, which avoids a blank line appearing between rows on some platforms.

Once the file has been opened we can create a CSV writer object and use this to write data to the file.

        # create csv writer to write data to file
        writer = csv.writer(f)

        # write header row
        writer.writerow(header_row)

        # write vulnerabilities
        for vuln in vulns:
            data_row = [vuln['product'], vuln['vendor'], vuln['description'], vuln['published'], vuln['cvss'], vuln['cve'], vuln['reference']]
            writer.writerow(data_row)

Here we use a Python for loop to iterate over the vulnerabilities stored as Python dictionaries in the vulns list. We look up each dictionary key in the same order as the header row we previously created. This data is stored in a new list (data_row) that is then written to the open CSV file using the CSV writer object (writer).
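Because each vulnerability is already a dictionary, the standard library’s `csv.DictWriter` is a possible alternative that avoids building each row by hand. The sketch below uses a made-up record and writes to an in-memory buffer (`io.StringIO`) instead of a real file so it can run anywhere. Note that the header row here is taken from the dictionary keys, so it comes out in lowercase rather than matching header_row.

```python
import csv
import io

# Made-up record using the same keys as the vuln dictionaries
vulns = [
    {
        "product": "example_product",
        "vendor": "example_vendor",
        "description": "Placeholder description, with a comma",
        "published": "2023-04-03",
        "cvss": "9.8",
        "cve": "CVE-0000-0000",
        "reference": "https://example.com/advisory",
    }
]

fieldnames = ["product", "vendor", "description", "published", "cvss", "cve", "reference"]

# io.StringIO stands in for a file opened with newline=''
buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=fieldnames)
writer.writeheader()      # header row built from fieldnames
writer.writerows(vulns)   # one CSV row per dictionary

output = buffer.getvalue()
print(output)
```

The description containing a comma is quoted automatically, which is one reason to prefer the csv module over joining strings manually.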

The context manager will automatically close the file we created so all we need to do now is indicate to the user that the script is completed by printing the web page title and filename using the variables we previously created.

    print(f"Printed {PAGE_TITLE}")
    print(f"-> see {FILENAME}")

The full script can be found on GitHub and will look like this:

    # import necessary Python modules
    import csv
    import requests
    from bs4 import BeautifulSoup

    # download the page
    WEB_PAGE = "https://www.cisa.gov/news-events/bulletins/sb23-100"
    page = requests.get(WEB_PAGE)

    # parse the page with Beautiful Soup
    soup = BeautifulSoup(page.content, "html.parser")

    # variables for output
    PAGE_TITLE = soup.title.string
    a = soup.title.string.split("of")
    b = a[1].split("|")
    FILENAME = "CISA vulnerabilities - " + b[0].strip() + ".csv"

    # capture high vulnerabilities table
    table = soup.find("table")
    table_body = table.find("tbody")
    rows = table_body.find_all("tr")

    # list to hold vulnerability dictionaries
    vulns = []

    # loop through table rows
    for row in rows:
        # create table columns
        cols = [x for x in row.find_all("td")]

        # extract relevant fields
        # (the first column reads "vendor -- product", so the vendor comes first)
        vendor, product = cols[0].text.split("--")
        description = cols[1].text.strip()
        published = cols[2].text.strip()
        cvss = cols[3].text.strip()
        cve = cols[4].find("a").text.strip()
        reference = cols[4].find("a").get("href")

        # store fields as a dictionary object
        vuln = {
            "product": product.strip(),
            "vendor": vendor.strip(),
            "description": description,
            "published": published,
            "cvss": cvss,
            "cve": cve,
            "reference": reference
        }

        # append dictionary object to vulnerability list
        vulns.append(vuln)

    # CSV file header row
    header_row = ["Product", "Vendor", "Description", "Published", "CVSS", "CVE", "Reference"]

    # create a CSV file
    with open(FILENAME, "w", encoding='UTF8', newline='') as f:
        # create csv writer to write data to file
        writer = csv.writer(f)

        # write header row
        writer.writerow(header_row)

        # write vulnerabilities
        for vuln in vulns:
            data_row = [vuln['product'], vuln['vendor'], vuln['description'], vuln['published'], vuln['cvss'], vuln['cve'], vuln['reference']]
            writer.writerow(data_row)

    print(f"Printed {PAGE_TITLE}")
    print(f"-> see {FILENAME}")

This script can be used to extract the high severity vulnerabilities from any weekly summary on CISA’s security bulletin site. Simply select the weekly vulnerability summary you want to scrape data from and copy its URL into the WEB_PAGE variable at the top of the script. Run the script and you will have a nicely formatted list of top vulnerabilities for that week!
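Rather than editing WEB_PAGE by hand each week, the URL could also be passed on the command line. The sketch below shows one way to do that with the standard `argparse` module. The `parse_args` helper and the DEFAULT_URL name are illustrative and not part of the original script.

```python
import argparse

# Falls back to the bulletin used throughout this article
DEFAULT_URL = "https://www.cisa.gov/news-events/bulletins/sb23-100"


def parse_args(argv=None):
    """Parse the command line; argv=None means 'use sys.argv'."""
    parser = argparse.ArgumentParser(
        description="Scrape the High Vulnerabilities table from a CISA weekly bulletin."
    )
    parser.add_argument(
        "url",
        nargs="?",              # the URL is optional
        default=DEFAULT_URL,
        help="bulletin page to scrape (defaults to the sb23-100 bulletin)",
    )
    return parser.parse_args(argv)


# An explicit list is passed here so the example behaves the same wherever it runs;
# in the real script you would call parse_args() with no arguments.
args = parse_args(["https://www.cisa.gov/news-events/bulletins/sb23-100"])
WEB_PAGE = args.url
print(f"Scraping {WEB_PAGE}")
```

With this in place, the rest of the script can keep using WEB_PAGE unchanged.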

There are many ways this script can be improved or adapted. For instance, you could use Object-Oriented Programming (OOP) to make each vulnerability its own object, or adapt the script to scrape another web page that lists vulnerabilities. These opportunities are left to you to explore!
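As a starting point for the OOP idea, here is a minimal sketch that models a vulnerability as a Python dataclass. The class name, the `score` and `is_critical` helpers, and the 9.0 threshold are assumptions made for illustration, and the sample values are invented.

```python
from dataclasses import astuple, dataclass
from typing import Optional


@dataclass
class Vulnerability:
    """One row of the High Vulnerabilities table (fields mirror the dictionary keys)."""

    product: str
    vendor: str
    description: str
    published: str
    cvss: str
    cve: str
    reference: str

    @property
    def score(self) -> Optional[float]:
        """CVSS score as a number, or None if the cell was empty or not numeric."""
        try:
            return float(self.cvss)
        except ValueError:
            return None

    def is_critical(self, threshold: float = 9.0) -> bool:
        """True when the score meets the (assumed) cut-off for critical."""
        return self.score is not None and self.score >= threshold

    def as_row(self) -> tuple:
        """Field values in declaration order, ready for csv.writer.writerow()."""
        return astuple(self)


# Made-up values for illustration only
example = Vulnerability(
    product="example_product",
    vendor="example_vendor",
    description="Placeholder description",
    published="2023-04-03",
    cvss="9.8",
    cve="CVE-0000-0000",
    reference="https://example.com/advisory",
)

print(example.is_critical())
print(example.as_row())
```

Keeping the raw cvss text as a string and converting it on demand means a row with a missing score can still be stored and written out.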

Next time in this series we will look at using APIs but until then, happy hunting!

Discover more in the Python Threat Hunting Tools series!