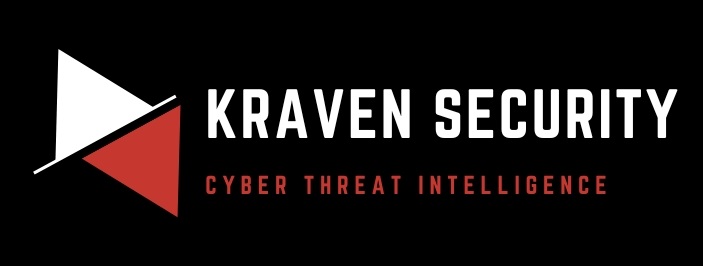

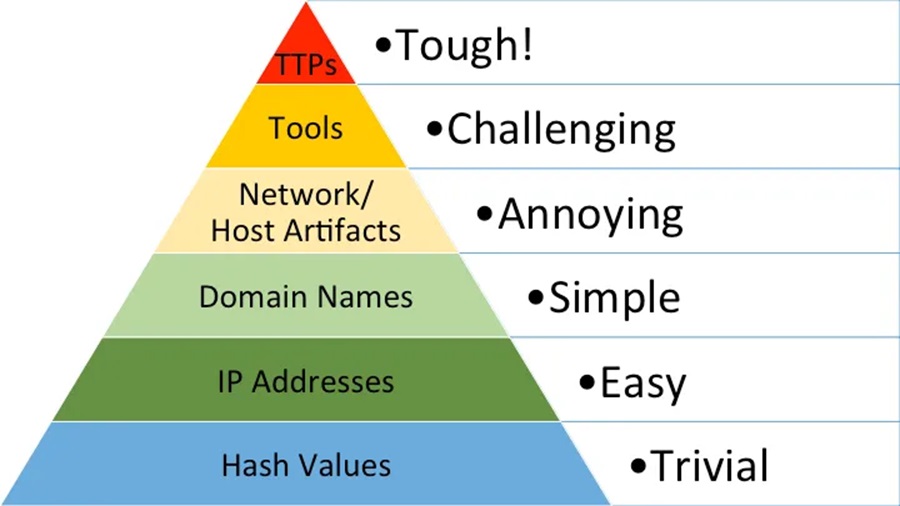

Today we will be taking a look at “The Pyramid of Pain” and how you can make bad guys cry.

The pyramid is an illustration that shows how some IOCs (Indicators of Compromise) are more difficult for an adversary to change than others. Its creator, David J. Bianco, argues that denying the adversary certain indicators causes them a greater loss (more pain) than denying them others. That loss is the time and effort it would take the adversary to replace the indicator. A defender should therefore aim to target the indicators that cause the greatest loss. The pyramid has become a cornerstone of many Cyber Threat Intelligence (CTI) teams and platforms, and it guides many security architects in how they deploy security solutions.

To understand the pyramid, and why it is so important, we must first consider what an IOC is and how they are used by defenders.

What is an Indicator of Compromise?

According to CrowdStrike, an IOC is “a piece of digital forensics that suggests that an endpoint or network may have been breached”, and these clues can help a security professional determine whether malicious activity has occurred. Defenders use IOCs in three main ways:

- They will use an IOC to determine whether an endpoint or network has been compromised. This happens during Digital Forensics and Incident Response (DFIR) operations, where a DFIR team searches through log files and machines looking for indicators of compromise.

- They will use an IOC to block the “known bad” from interacting with their endpoints and networks. For instance, if they know a certain IP address is associated with a ransomware campaign, they will block it from connecting to their external servers. This is how traditional Anti-Virus (AV) products worked: they kept a long list of hashes known to be malicious and blocked any file with a matching hash from executing on a machine.

- They will use an IOC to perform IOC-based hunting. This form of threat hunting involves ingesting a threat intelligence report, which lists the latest IOCs that threat intelligence vendors have seen infecting or targeting other environments, and then actively searching for these IOCs in their own estate.
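Both the blocking and the hunting uses above come down to the same operation: checking observed values against a list of known-bad indicators. The Python sketch below illustrates IOC-based hunting under clearly stated assumptions. The IOC feed, the log records and their field names (`host`, `sha256`, `dst_ip`, `domain`) are all hypothetical, and the IP addresses and domains come from reserved documentation ranges rather than real threat intelligence.

```python
# Hypothetical IOCs from a threat intelligence report, grouped by field.
# The IP and domain use reserved documentation ranges (RFC 5737, RFC 2606).
IOC_FEED = {
    "sha256": {"0f" * 32},  # placeholder hash, not a real malware sample
    "dst_ip": {"203.0.113.66"},
    "domain": {"malware.example"},
}


def hunt(records: list, ioc_feed: dict) -> list:
    """Return (host, field, value) for every record field that matches a known IOC."""
    hits = []
    for record in records:
        for field, bad_values in ioc_feed.items():
            value = record.get(field)
            # IOCs in the feed are stored lowercase, so normalise before comparing.
            if value is not None and value.lower() in bad_values:
                hits.append((record["host"], field, value))
    return hits


# Hypothetical log records collected from our own estate.
logs = [
    {"host": "WS-01", "sha256": "ab" * 32, "dst_ip": "198.51.100.7", "domain": "intranet.example"},
    {"host": "WS-02", "sha256": "ab" * 32, "dst_ip": "203.0.113.66", "domain": "malware.example"},
]

for host, field, value in hunt(logs, IOC_FEED):
    print(f"[!] {host}: {field} matched IOC {value}")
```

A blocking control would perform the same set lookup inline, for example before allowing a connection, rather than retrospectively over logs.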

IOCs play a key part in any defender’s cyber security strategy, but not all IOCs are created equal, and this is where the Pyramid of Pain comes in.

The Pyramid of Pain

The pyramid consists of six layers of IOCs, ordered from bottom to top by how much pain it causes an adversary to change them.

1. Hash Values: A hash is the fixed-length alphanumeric value generated by applying a hashing algorithm (e.g. MD5, SHA-1, SHA-256) to a file. Hashes provide unique references to specific malware or files seen during a breach/incident. Unfortunately, they are easily changed: a small adjustment to a malware’s source code (followed by recompiling), or adding a single character to a file, produces a completely different hash.

2. IP Addresses: These are numeric identifiers for locations on the Internet (e.g. servers connected to the Internet). Adversaries launch their attacks from Internet-connected servers, and each of these servers has an associated IP address. An attacker can change their IP address with relative ease, for example by routing traffic through a proxy server or simply obtaining a new address from their ISP.

3. Domain Names: These are the alphanumeric (human-readable) identifiers for Internet locations (e.g. malware.com), and they resolve to IP addresses. A domain name has to be registered through a domain registrar, so domains are slightly more painful to change: there is a small cost involved, and malware will need to be reprogrammed to call back to a different domain name.

4. Network / Host Artifacts: These are the artifacts (files, registry keys, logs, etc.) that a piece of malware or a threat actor leaves behind after compromising a system/network. These artifacts are more difficult for a threat actor to hide. For instance, a specific registry key may be created or modified to make the malware persistent on an infected device. This is harder to change, but the bad guy could ultimately use another persistence mechanism, or even just another registry key, to achieve the same result.

5. Tools: These are the specific tools a threat actor uses. For instance, they could abuse the built-in Windows tool certutil.exe to decode files and download malware onto a system. If you block this tool from executing in your environment, they would need to find a completely different tool to download their malware and, hence, rethink their post-exploitation strategy.

6. TTPs: These are the tactics, techniques, and procedures an adversary uses to compromise a system and breach an environment. For example, phishing emails are a common TTP. Targeting this TTP and eliminating phishing as an initial access vector (something considerably easier said than done) would force a threat actor to develop a new initial access method. This would cause them significant pain, as they would need to invest resources in developing that new method, and the vast majority of threat actors would simply move on to the next target.
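To see why hash values sit at the bottom of the pyramid, the Python sketch below hashes a stand-in byte string (not real malware), appends a single byte, and checks both versions against a hash blocklist. The sample bytes and the blocklist are illustrative assumptions. Only `hashlib.sha256` comes from Python’s standard library.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


# Stand-in bytes for a malware sample (illustrative only, not real malware).
original = b"pretend this is a malicious binary"
# The adversary appends a single byte, e.g. padding at the end of the file.
modified = original + b"A"

# A hash blocklist built from the sample seen in yesterday's incident.
blocklist = {sha256_hex(original)}

for name, sample in (("original", original), ("modified", modified)):
    digest = sha256_hex(sample)
    print(f"{name}: {digest[:16]}...  blocked={digest in blocklist}")
```

The original is reported as blocked and the one-byte variant is not. The two digests look completely unrelated, so a hash rule gives no protection against even a trivial modification.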

How to Use the Pyramid of Pain

With the pyramid defined, it is important to consider how we, as defenders, can use it to make our organisations more secure.

The more pain it causes an adversary to change an IOC, the less likely they are to change it. As such, the higher up the pyramid a defender can detect and respond to IOCs, the less likely they are to fall victim to an adversary who relies on them.

For example, if I write a detection rule to block a specific hacking tool’s file hash, then great: I can block this one specific version of the tool and I am safe for today. But what if tomorrow the bad guy simply adds a few strings and recompiles the tool? Now my detection rule no longer works and their attack succeeds. What if, instead, this tool always writes a specific file to the TEMP directory on Windows machines as part of its execution? I could write a rule to detect that, and now we’ve moved up the pyramid: the adversary will need to use another tool, or rewrite their current one, to evade this detection. Most adversaries won’t bother and will simply move on to the next target.
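The scenario above can be sketched in Python as two rules evaluated against the same file-creation telemetry. Everything here is hypothetical: the `FileCreateEvent` schema, the two tool builds, and the marker file name `svc_cache.dat` are invented for illustration. The hash rule catches only the exact build it was written for, while the artifact rule still fires after the tool is recompiled.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass
class FileCreateEvent:
    """Simplified 'file created' telemetry event (hypothetical schema)."""
    host: str
    process_sha256: str  # SHA-256 of the process that wrote the file
    path: str            # full Windows path of the file that was written


def hash_rule(event: FileCreateEvent, known_bad: set[str]) -> bool:
    """Bottom of the pyramid: matches one exact build of the tool."""
    return event.process_sha256 in known_bad


def artifact_rule(event: FileCreateEvent) -> bool:
    """Host artifact: matches the tool's (made-up) marker file in a TEMP directory."""
    path = event.path.lower()
    return "\\temp\\" in path and path.endswith("\\svc_cache.dat")


# Two builds of the same tool: v2 is v1 recompiled with a few junk strings added.
tool_v1 = b"hacking tool build 1"
tool_v2 = tool_v1 + b" junk strings"
known_bad = {hashlib.sha256(tool_v1).hexdigest()}

events = [
    FileCreateEvent("WS-01", hashlib.sha256(tool_v1).hexdigest(),
                    r"C:\Users\alice\AppData\Local\Temp\svc_cache.dat"),
    FileCreateEvent("WS-02", hashlib.sha256(tool_v2).hexdigest(),
                    r"C:\Users\bob\AppData\Local\Temp\svc_cache.dat"),
]

for e in events:
    print(f"{e.host}: hash rule={hash_rule(e, known_bad)}, "
          f"artifact rule={artifact_rule(e)}")
```

WS-01 triggers both rules. WS-02, running the recompiled build, triggers only the artifact rule.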

The higher up the pyramid of pain your detection rules target, the more effective they are likely to be at stopping a bad guy. However, writing these detection rules requires the defender to have a deeper understanding of the technical details of how an attack works. The defender needs to know what an attacker is doing, how they are doing it, and how to detect evidence of it. A defender can’t just stop at “bad guy uses XYZ tool, I will get a hash of this tool and block it”. This mindset is not good enough in today’s cyber security world. We need to know how an adversary achieves their goals so that, whatever tool they use, we can detect it.

The MITRE ATT&CK Matrix

This may sound like a daunting task but, thankfully, there is a CTI tool that can help with writing these rules: the MITRE ATT&CK Matrix. This matrix gives cyber security professionals a common language for describing how attackers compromise systems in terms of their tactics, techniques, and procedures.

You can use this as a framework to map an adversary to specific TTPs and, from this, write detection rules that will alert a defender if these TTPs are seen in their environment. Threat intelligence articles are filled with references to MITRE ATT&CK techniques, and threat intelligence analysts use these articles to better protect their estate from attacks being seen in the real world.
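As a rough illustration of mapping an adversary to TTPs, the Python sketch below compares the ATT&CK techniques attributed to a hypothetical adversary with the techniques our existing detection rules are tagged with, then lists the gaps. The technique IDs and names are real ATT&CK entries. The adversary profile and the rule names are made up for this example.

```python
# Hypothetical profile: ATT&CK techniques a threat intelligence report attributes to "bad guy XYZ".
XYZ_TECHNIQUES = {
    "T1566.001": "Phishing: Spearphishing Attachment",
    "T1059.001": "Command and Scripting Interpreter: PowerShell",
    "T1105": "Ingress Tool Transfer",
    "T1547.001": "Boot or Logon Autostart Execution: Registry Run Keys / Startup Folder",
}

# Hypothetical existing detection rules, each tagged with the technique it targets.
OUR_RULES = {
    "office_spawns_shell": "T1566.001",
    "suspicious_powershell_cmdline": "T1059.001",
}


def coverage_gaps(adversary_techniques: dict, rules: dict) -> list:
    """Return the adversary's technique IDs that no existing rule is tagged with."""
    covered = set(rules.values())
    return sorted(tid for tid in adversary_techniques if tid not in covered)


for tid in coverage_gaps(XYZ_TECHNIQUES, OUR_RULES):
    print(f"No detection yet for {tid} ({XYZ_TECHNIQUES[tid]})")
```

The output is a prioritised to-do list of detection rules to write: here, T1105 and T1547.001.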

For instance, technique T1566.001 relates to an adversary using a spearphishing attachment to gain initial access to a corporate environment, which a threat intelligence article may detail as a method used by bad guy XYZ.

An analyst may then read this article, conclude that bad guy XYZ is likely to target them, and focus on writing threat hunting or detection rules that are tailored to detect this initial access method (e.g. Office documents spawning CMD or PowerShell processes).
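A detection for the example above might look something like the following Python sketch, which flags Office applications spawning a command shell and tags the alert with T1566.001. The event fields are loosely modeled on process-creation telemetry such as Sysmon Event ID 1, which records `Image`, `ParentImage` and `CommandLine`. However, the `ProcessCreateEvent` class, the process lists and the sample events are assumptions for illustration. A production rule would usually be written in your SIEM’s or EDR’s own query language.

```python
from dataclasses import dataclass

# Office applications that should rarely, if ever, launch a shell (assumed list).
OFFICE_PARENTS = {"winword.exe", "excel.exe", "powerpnt.exe", "outlook.exe"}
# Shell interpreters commonly launched by malicious documents (assumed list).
SHELL_CHILDREN = {"cmd.exe", "powershell.exe", "pwsh.exe"}


@dataclass
class ProcessCreateEvent:
    """Simplified process-creation event (hypothetical schema)."""
    host: str
    parent_image: str  # full path of the parent process
    image: str         # full path of the new process
    command_line: str


def basename(path: str) -> str:
    """Return the lowercase file name from a Windows-style path."""
    return path.replace("/", "\\").rsplit("\\", 1)[-1].lower()


def office_spawns_shell(event: ProcessCreateEvent) -> bool:
    """True when an Office process is the parent of a shell process."""
    return (basename(event.parent_image) in OFFICE_PARENTS
            and basename(event.image) in SHELL_CHILDREN)


events = [
    ProcessCreateEvent(
        "WS-03",
        r"C:\Program Files\Microsoft Office\root\Office16\WINWORD.EXE",
        r"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
        "powershell.exe -NoProfile -WindowStyle Hidden",
    ),
    ProcessCreateEvent(
        "WS-04",
        r"C:\Windows\explorer.exe",
        r"C:\Windows\System32\cmd.exe",
        "cmd.exe",
    ),
]

for event in events:
    if office_spawns_shell(event):
        print(f"[T1566.001] {event.host}: {basename(event.parent_image)} "
              f"spawned {basename(event.image)} ({event.command_line})")
```

Only WS-03 raises an alert. A user opening a command prompt from Explorer on WS-04 is ordinary behaviour and is ignored.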

It is important to remember that the MITRE ATT&CK matrix is just a list of known ways threat actors have targeted organisations. A determined adversary who is targeting a specific organisation will likely change their IOCs and, ultimately, develop new TTPs to breach that organisation if their first attempt fails. These adversaries tend to be described as Advanced Persistent Threat actors (APTs) and, despite a common misconception in cyber, the majority of organisations are unlikely to be on their target list.

In the current cyber security landscape, it is much more likely that an organisation will be targeted by a cyber criminal group looking for profit than by a nation state or other APT.

Conclusion

The pyramid of pain is a good illustrative tool for cyber security professionals to explain the importance of investing resources into detection methods that look beyond simple file hashes or IP addresses. It’s a concept that helps show less technical individuals the importance of writing well thought out detection and hunting rules that go beyond the basics.

Although these rules may require greater knowledge, they will inflict greater “pain” on an adversary and keep an organisation safer for longer. Use the pyramid the next time you need to explain to someone in a suit why you need a bigger cyber security budget.